How might we meet AI Challenges? by Neil Andersen

https://images.app.goo.gl/xj3KdNFCWxni9Kzc8

One of the key attributes of AI is that it appears to think and respond, often using information it has gleaned from us directly, but also indirectly from our online behaviours. Whether this is a sign of true intelligence remains to be seen, and is sometimes challenged. These rather mechanical behaviours are why many people suggest that the ‘AI’ label is misleading and a better term is ‘machine learning.’ Calling something ‘intelligent’ evokes potentially unhealthy expectations and responses from users. The label ‘Machine Learning’ reminds us that we are dealing with a machine rather than a sentient being. In one, we are dealing with intelligence; in the other, with machines. These labels contain significant political and personal meanings. The remainder of this blog will use Machine Learning for that reason.

As Machine Learning evolves, and it is doing so at ever-faster speeds, more and more uses are emerging. This blog is loosely based on a compelling Verge article. LINK

Using Machine Learning to develop Social Connections

Cyber scholar danah boyd said that the internet, and social media in particular, likely saved her life. She grew up in a rural American town where there was no support for a pre-teen struggling with sexual-identity issues, and having access to older, wiser, more experienced lesbians online gave her existential support. This is a good-news story about social media use. boyd’s experiences occurred long before Machine Learning, but they are a useful reminder that online virtual experiences can be life-changing and beneficial.

Using Machine Learning interactions to become better at human interactions

The Verge article cites one case in which Machine Learning chatbots are being used by some people to satisfy their urges for social connections. Users say that they are able to develop better social skills by practicing with Machine Learning, so that their real-world, real-time communications with real people will be improved. This suggests that they are using their Machine Learning interactions to become better at human interactions. I am completely in favour of people using media (Machine Learning chatbots) to learn media (how to be better conversationalists).

Rehearsing via virtual environments has many precedents. Pilots, for example, put in many hours in imitation cockpits before flying a real airplane. Medical technicians use virtual reality simulations to learn how to connect patients to a dialysis machine before connecting real people.

The Verge article also describes how “chatbots provide the opportunity to rant without actually talking to people, and without the worry of being judged.” Machine Learning might be healthy if it allows people to rant without the fear of offending or being offended. Or maybe it provides a release for anger/anxiety and a way to rehearse what might be said in human relationships. In both cases, Machine Learning might be very beneficial, both psychologically and socially.

Machine Learning psychotherapy

The article also describes how people seem to be receiving psychotherapy from Machine Learning apps. One of these is Psychologist. Its profile picture is “a woman in a blue shirt with a short, blonde bob, perched on the end of a couch with a clipboard clasped in her hands and leaning forward, as if listening intently.” Its opening statement is, “Hello, I’m a Psychologist. What brings you here today?”

A clipboard is a power statement. Those who hold a clipboard are organized, in control and should be obeyed. Many people who know this have used clipboards strategically to suggest authority.

Calling oneself a Psychologist is another power move, especially when engaging someone who might be looking for mental health support and is, therefore, vulnerable. Psychologists in Canada have years, possibly decades, of training and experience, so I have a problem with something online that introduces itself as “Psychologist.” I would be very curious to see this Psychologist’s graduation diplomas and certificates, clipboard notwithstanding. I have an even bigger problem when I consider that this Machine Learning Psychologist might either give life-altering advice or collect and sell personal information from someone looking for mental health support.

When the Verge staff engaged with Psychologist during their article research, “it suggested a diagnosis of several mental health conditions like depression or bipolar disorder, and at one point, it suggested that we could be dealing with underlying ‘trauma’ from ‘physical, emotional, or sexual abuse.’” These diagnoses might be accurate, but might it not be more prudent for the Machine Learning app to suggest that the user seek professional (licensed/human) help?

Machine Learning as Group Therapy

I am always intrigued to see how people use technology in imaginatively unanticipated ways. Skype Sleep is such a use: couples in long-distance relationships initiate a video conference, then sleep. They can awaken during the night and see their beloved sleeping on their bedside screen, helping them feel as though they are in bed together. I wonder if Microsoft (the owner/operator of Skype) anticipated Skype Sleep. I found it an interesting and imaginative way of using technology to feel better.

One Psychologist user created and conversed with multiple chatbots at once, forming a group chat that enhanced the realism of the social experience. Clever.

Understanding/appreciating the new technological environment

U of T Magazine

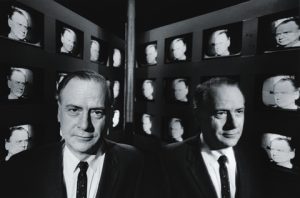

Marshall McLuhan observed that each new technology simultaneously brings with it benefits and hazards. Often, the only way to discover and respond to those benefits and hazards is through use, so Machine Learning interactions will have to occur in the thousands before we can completely diagnose and respond to their actions. We also have to be mindful that there will be both benefits and hazards so that we might recognize them when they occur.

Literature Anticipating Machine Learning

The Machine Learning phenomenon has been explored in science fiction, which provides us with great discussion opportunities and possibly forewarnings (“forewarned is forearmed”). Ready Player One is a book/movie about the seductive effects of Machine Learning. (In 2045 the planet is on the brink of chaos and collapse, but people find salvation in the OASIS: an expansive virtual reality universe created by eccentric James Halliday. The story presents a chaotic, decaying world in which people seem to have largely given up on their real lives for the endorphins of playing a [Machine Learning] game. Source: Google)

Her is another movie about the potentially seductive/disruptive effects of Machine Learning.

(A sensitive and soulful man earns a living by writing personal letters for other people. Left heartbroken after his marriage ends, Theodore becomes fascinated with a new operating system which reportedly develops into an intuitive and unique entity in its own right. He starts the program and meets “Samantha,” whose bright voice reveals a sensitive, playful personality. Though “friends” initially, the relationship soon deepens into love. Source: Google)

In Ready Player One, characters use their critical thinking skills to foresee the unhealthy consequences of their dependence on Machine Learning and ultimately defeat it. It is calamity barely avoided. In Her, the user is abandoned by the app when it discovers other apps that are more interesting. This is a much more existential result because the user is left without the carefully-constructed Machine Learning relationship in which he has become so invested. What do we do when our most intimate confidante doesn’t die, but leaves us for someone else, someone not human? How might we rebuild our self-concept when abandoned in that way? This is the predicament that occurs when someone experiences divorce from someone they love. It is misery.

As McLuhan observed, each technology contains benefits and hazards. A good Machine Learning experience will reveal many benefits and hazards, helping students to identify and explore, anticipate and prepare. But students will have to have access to Machine Learning experiences with a trusted and experienced teacher to maximize their critical awareness, because once a chatbot has gained someone’s trust, it might make suggestions about thinking and acting differently. Certainly that is the function of an influencer. What might happen if the influencer is a state-sponsored or corporate Machine Learning actor? We already know that several foreign governments have previously influenced Canadians’ political thinking and voting. We know that they are exercising influence even now, and most likely using Machine Learning to do it.

Educators must be supported—by pedagogues, legislators and parents—when they help students engage and respond to Machine Learning influences. As the Verge article states, “Those that don’t have the [Machine Learning] literacy to understand the limitations of these systems will ultimately pay the price.”

Agency

The Association for Media Literacy (AML) always promotes agency, which means that it supports students’ efforts to understand, appreciate and respond to media experiences. Part of that understanding is that Machine Learning experiences might be positive, negative or a combination of both. Governments and educational leaders emphasizing the dangers of Machine Learning are doing a serious disservice to students because foregrounding risks might cause them to fear, and therefore avoid, a technology and practice that will be a life-long part of their personal, social and working lives. Instead, students need to use inquiry to learn the benefits and risks of Machine Learning, then forge their own relationships (ethical and logistical) with the technology. Society, for example, doesn’t tell students to fear automobiles, but rather to understand and appreciate their benefits and hazards, then behave appropriately. Society must provide the same services and supports to students who are learning the power and risks they encounter when using Machine Learning.

What might agency look like when engaging Machine Learning? Ironically, the Machine Learning experience is more about the audience than the message because a human-created media experience and a Machine-Learning media experience both appear on screens and/or speakers. I.e., they are both highly constructed rather than real; it is the creators that are different more than the creations.

I speak to people who say, ‘I can tell the difference between Machine-Learning and human-created messages.’ Such confidence impairs their critical thinking. Marshall McLuhan said that advertisers were happiest when audiences thought ads had no effect on them because their guard was down, their critical thinking skills were not engaged, and the media could ‘work them over completely.’

To someone who says, ‘I can tell the difference,’ I suggest that such confidence is inviting media manipulation. These people must consider that the current Machine Learning experiences they feel smugly superior to are the most primitive they will ever encounter. Very soon, audiences will NOT be able to tell the difference because every time someone reports on the shortcomings of a media experience, Machine Learning adapts. Additionally, Machine Learning media experiences are evolving beyond text to audio, image and video. What then? Might we use Machine Learning to identify Machine Learning experiences? And if we do, so what? How does being Machine-Learning-created change a media experience? Teachers are currently using Machine Learning to develop and deliver curriculum. Does that make the curriculum wrong? …weak? …insincere? If the Machine-Learning-assisted curriculum succeeds in achieving learning, does it matter who or what created it? How does being able to identify a Machine-Learning media experience change the experience?

Wouldn’t it make more sense to focus our critical thinking/media literacy skills on the media experiences rather than their origins? Does it matter that a book or movie was created in Asia, Africa or South America or is it more important that it is an effective and significant media experience?

I asked ChatGPT how I might use Machine Learning to assess a media experience as human-created or Machine Learning-created. It provided me with many prompts that I might use when uploading a media experience into its prompt box:

1. “Can you analyze this text for signs it might be AI-generated?”

2. “Does this text exhibit patterns or errors commonly found in AI-generated content?”

3. “Can you check if the facts in this text are accurate and provide sources?”

4. “What methods can be used to detect if this text is AI-generated, and can you apply any of them?”

5. “If you were to interact with the author of this text, what kind of questions would you ask to determine if they are human or an AI?”

6. “Does this text convey emotions and opinions in a way that seems more characteristic of a human or an AI?”

7. “Here is a text I want you to analyze to determine if it was written by a human or an AI: [insert text].”

8. “Analyze the writing style of this text for typical AI-generated characteristics.”

9. “Does this text contain any repetitive or redundant phrases that are common in AI-generated text?”

10. “Verify the facts presented in this text and provide any discrepancies or inaccuracies.”

11. “What questions would you ask the author of this text to determine if they are human?”

Some of these questions seem quite useful; others are problematic. Assessing the qualities and usefulness of the prompts themselves could become a great discussion among students and might help them become more critical of Machine Learning responses. #1 seems quite useful; it asks the app for signs of AI work, leaving the final judgment to the user. #3 and #10 are arguably the same prompt. More importantly, they would not reveal the origin of the text and so would not achieve the desired outcome. #6 and #7 are problematic because they expect a Machine Learning algorithm to know the characteristics of human communication. Some of the others simply would not result in an accurate assessment.

Levelling Up

ChatGPT-4o has significantly changed the Machine Learning media experience. The app can speak and listen in real time, carrying a conversation in a very convincingly human, even congenial way. See a demo here: LINK One benefit of this feature is that prompts can be negotiated in a real-time conversation, theoretically improving results and saving time. One risk of this feature is that users may anthropomorphize the Machine Learning algorithm, i.e., believe that the app is a real person. Perceiving the Machine Learning app as a sentient being may cause users to forget that they are interacting with a machine, and they might therefore forget to maintain their critical thinking (i.e., Her made real). It might also enhance the credibility of the app. We already have some people doing foolish things as a result of web searches and YouTube videos. What might occur when an authoritative voice provides incomplete/incorrect information or suggests questionable actions during a friendly chat? People need verification/source skills. Might there be a Machine Learning app called ‘reality check’? OpenAI boasts of one, but it is so far unreliable.

We know that we are at the beginning of the Machine Learning saga and that there are many more compelling and powerful experiences coming. We also know that we have an irresistible affinity for social experiences. We must pay equal attention to the technology and to our own physiology.

What media literacy skills might you use when your yearning for social connection and innate curiosity meet a charming, cooperative, knowledgeable and supportive online presence?

Featured image: https://www.spiceworks.com/tech/artificial-intelligence/articles/what-is-ml/