Algorithms and Media Literacy

by Helen J. DeWaard

Algorithms and media aren’t usually considered together. Algorithms are thought to be mathematical and computational sequences that manage our computer data. Media are related to popular culture as expressed through text, image, audio, or video. Both are current areas of focus for educators in the classroom as computational thinking, coding, and fake news compete for attention in classroom curriculum at all grade levels. When we dig beneath the surface of these two concepts, we see threads that bind them.

nbcnews.com

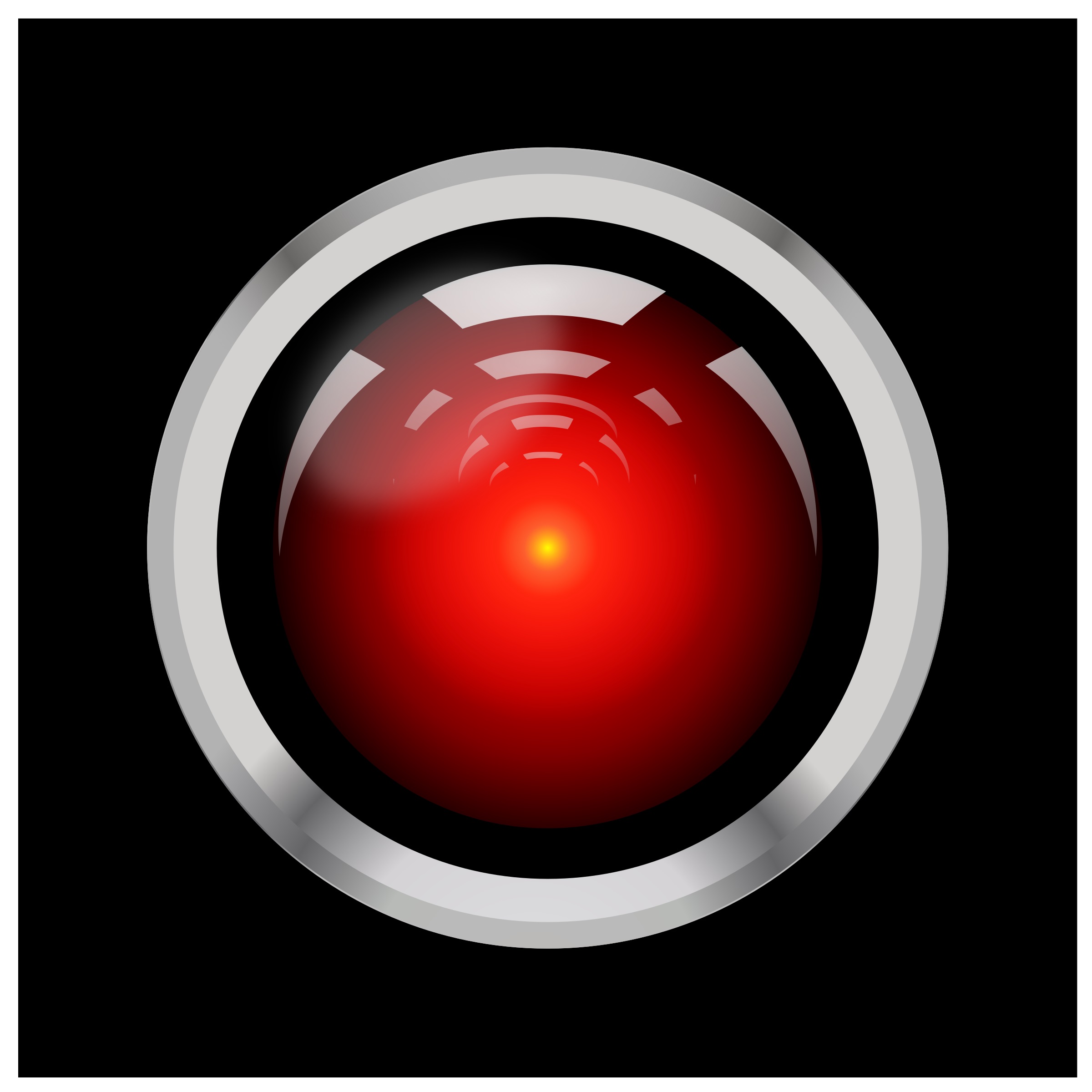

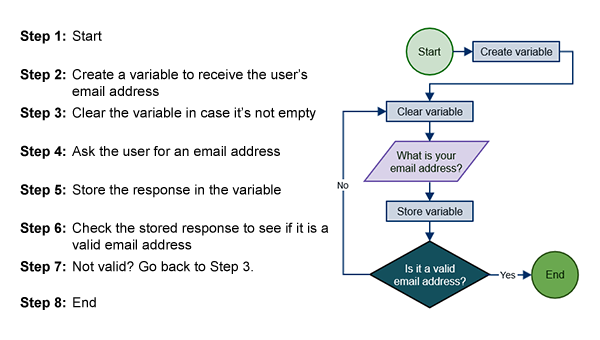

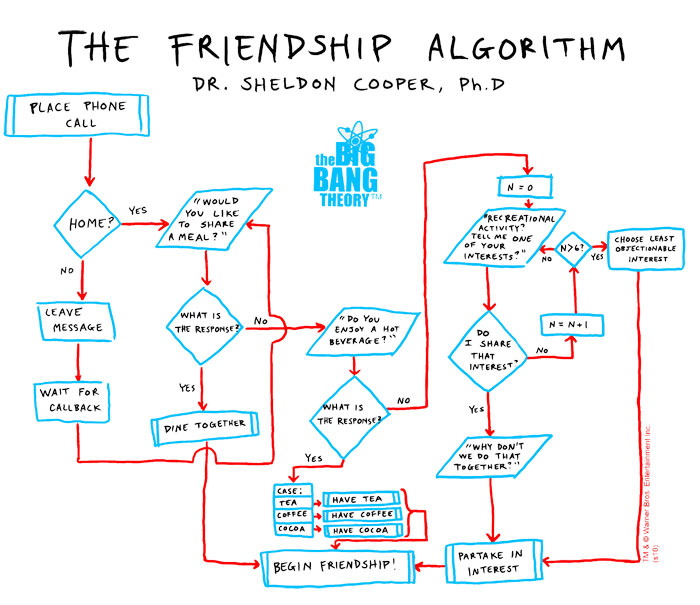

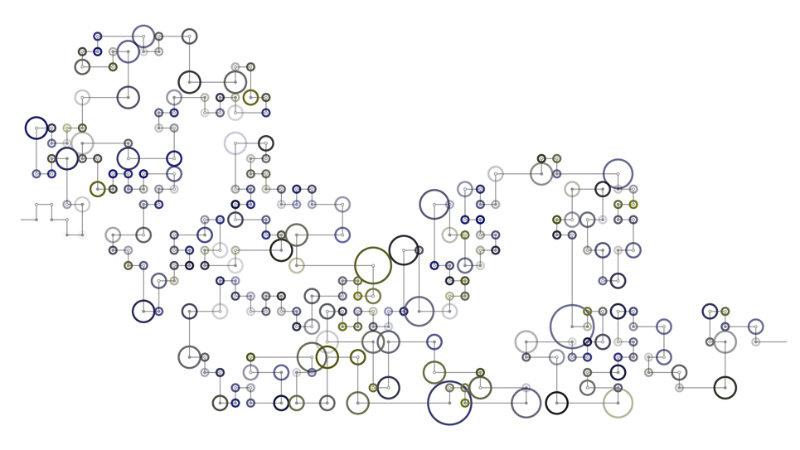

An algorithm is defined as a set of rules, sequential steps, or a series of actions that creates a desired outcome. In computational and electronic terms, it’s a set of directions, written in code, that determines the actions and reactions that occur when using a computer (Farnam Street Media Inc., 2018). These algorithms are the basis of every computer search and every news feed for individual users.

Algorithms, and the computer code upon which they rely, are often thought to be neutral, but they are “actually imbued with the agenda, biases, and vulnerabilities of the programmer” (Cohen, 2018, p. 145). While many consider algorithms to be code-conductors, the process is a human equation with “humans on either end: the programmer and the end-user. The platform acts as the interactive mediator and media host” (Cohen, 2018, p. 145). As educators of media literacies, it’s time to teach students to interrogate algorithms in order to understand how they operate (Cohen, 2018).

sloanreview.mit.edu

Media encompass a wide range of text, image, audio, and video productions. When specifically examining digitally created and consumed electronic texts in all their variations, the “algorithmic media environment is a custom, unique environment that changes as the user changes, but also with the culture surrounding the user” (Cohen, 2018, p. 145). The connections between media and algorithms occur, according to Cohen (2018), because “users are often unaware that news media and informative content consumed on social media news feeds or timelines are uniquely customized to each user’s preferences” (p. 140).

gumbo.blogspot.com


The challenge for educators who teach media literacy is to raise awareness of media environments controlled by algorithms, since these are different “from screen media ecologies by incorporating data collected both online and offline to present consumers with unique media feeds, advertisements, and the distribution order of media content” (Cohen, 2018, p. 140). An individual’s search for information or news results in a “media environment that best suits each user’s preferences” (Cohen, 2018, p. 143).
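
To make the idea of a customized “distribution order” concrete for students, the following is a minimal Python sketch of a toy feed. It is an illustration only and is not drawn from Cohen (2018) or from any real platform: the story catalogue, the click histories, and the `rank_feed` function are all hypothetical, and real systems draw on far more data than past clicks.

```python
from collections import Counter

# Hypothetical catalogue of stories: (headline, topic).
STORIES = [
    ("City council passes budget", "politics"),
    ("Local team wins final", "sports"),
    ("New phone released", "technology"),
    ("Election poll released", "politics"),
    ("Star striker transfers", "sports"),
]


def rank_feed(stories, click_history):
    """Order stories so that topics the user clicked most often come first.

    This is an assumed, deliberately simple rule used for illustration.
    """
    topic_counts = Counter(click_history)
    # sorted() is stable, so stories with equal scores keep catalogue order.
    return sorted(
        stories,
        key=lambda story: topic_counts[story[1]],
        reverse=True,
    )


# Two hypothetical users with different past clicks.
user_a_clicks = ["politics", "politics", "sports"]
user_b_clicks = ["technology", "sports", "sports"]

user_a_feed = [headline for headline, _ in rank_feed(STORIES, user_a_clicks)]
user_b_feed = [headline for headline, _ in rank_feed(STORIES, user_b_clicks)]

print("User A sees:", user_a_feed)
print("User B sees:", user_b_feed)
```

Both users draw on the same five stories, yet each sees them in a different order, and the topics a user never clicked sink to the bottom. Asking students to predict each feed before running the code can open a discussion of how a rule this simple, repeated over time, narrows what a user encounters.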

While informed and critically aware media users may see past the resulting content found in suggestions provided after conducting a search on YouTube, Facebook, or Google, those without these skills, particularly young or inexperienced users, fail to realize the culpability of underlying algorithms in the resultant filter bubbles and echo chambers (Cohen, 2018).

Media educators should examine new tools and mechanisms to explore how algorithms are impacting users’ actions in social media. Cohen (2018) suggests that students become aware of “how social media platforms and digital distribution outlets use the algorithm to increase time spent online by incorporating the collection, quantization, and repurposing of user data, including most importantly, the ‘negative’ media data activity, or the time calculated off the platform” (p. 148).


storiesbywilliams.com

Media literacy education is more important than ever. The reason is not just the overwhelming calls to understand the effects of fake news or to address data breaches threatening personal information; it is also the artificial intelligence systems being designed to predict and project what consumers of social media are perceived to want. Cohen (2018) emphasizes that “contemporary media literacy education needs to be more intentionally situated in humanizing digital media, so as to interrogate the programmers’ intention and possible shortsighted deployments” (p. 148). It’s time to revisit, with an algorithmic focus, the Eight Key Concepts of media literacy, originally developed by the Association for Media Literacy to explore media messages with a critical lens (The Association for Media Literacy, n.d.). This will critically position the analysis of algorithms in the realm of media literacy education.

The Unbias Fairness Toolkit is just such an effort. Created by a collaborative team in the United Kingdom and shared by Giles Lane (Civic Thinking for Civic Dialogue), this teaching resource provides a “civic thinking tool for people to participate in a public civic dialogue” (Lane, 2018). It is an effort to examine “issues of algorithmic bias, online fairness and trust to provide policy recommendations, ethical guidelines and a ‘fairness toolkit’ co-produced with young people and other stakeholders” (Lane, 2018).

The formation of the Unbias Fairness Toolkit comes at a time when populations are questioning social media services, not just over their ability to manipulate and warp political outcomes, but also over their ability to safely and securely manage personal data. With an increasing sense of isolation and disconnection from cultural and social experiences (Lane, 2018; Turkle, 2011), the Unbias Fairness Toolkit provides a “mode of critical engagement with the issues that goes beyond just a personal dimension (“how does this affect me?”) and embraces a civic one (“how does this affect me in relation to everyone else?”)” (Lane, 2018). Results about the Unbias Fairness Project are shared in the Our Key Findings blog post.


Literacy in today’s online and offline environments “means being able to use the dominant symbol systems of the culture for personal, aesthetic, cultural, social, and political goals” (Hobbs & Jensen, 2009, p. 4). Being civically engaged and civil in actions in and about social media spaces is susceptible to the polarizing forces of current algorithms. Media literacy education can do much to counter the influence of algorithms in current media environments (Cohen, 2018). “Operationalizing media literacy to consider the algorithm as media environment prepares for citizens a way to imagine digital media platforms distinctly from collective media distribution” (Cohen, 2018, p. 148).

Understanding the human side of algorithmic equations when using social media resources can be promoted with the application of media literacy frameworks. As educators, it’s time to consider algorithms and social media equations as a central part of our media curriculum.

References

The Association for Media Literacy. (n.d.). What is media literacy? Retrieved December 18, 2018, from https://aml.ca/keyconceptsofmedialiteracy/

Cohen, J. (2018). Exploring echo-systems: How algorithms shape immersive media environments. Journal of Media Literacy Education, 10(2), 139-151.

Farnam Street Media Inc. (2018). Mental models: The best way to make intelligent decisions (109 models explained). [website]. Retrieved December 18, 2018, from https://fs.blog/mental-models/

Hobbs, R., & Jensen, A. (2009). The past, present and future of media literacy education. Journal of Media Literacy Education, 1(1), 1-11.

Lane, G. (2018, March 14). Civic thinking for civic dialogue. [weblog]. Retrieved December 18, 2018, from https://gileslane.net/2018/03/14/civic-thinking-for-civic-dialogue/

Our Future Internet. (2018, May 14). Our future internet: free and fair for all. Retrieved from https://youtu.be/ovAvjDgaqWk

Our Future Internet. (2018, November 21). Unbias project key findings. Retrieved from https://www.youtube.com/watch?time_continue=15&v=3r4O36vd7TU

Turkle, S. (2011). Alone Together. Philadelphia PA: Perseus Basic Books.

Unbias Project. (n.d.). About us. Retrieved from https://unbias.wp.horizon.ac.uk/about-us/