AI Representation: Conflicting Values by Neil Andersen

Neil Andersen’s new blog, the first in a series, comments on Google’s Gemini AI through a Verge article that reveals how conflicting commands can affect the accuracy of generated images. He also offers thought-provoking inquiry questions for the classroom.

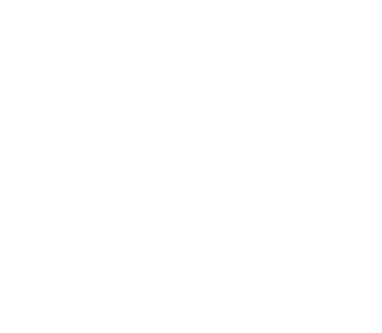

Google has apologized for its Gemini AI generating historically inaccurate images. A Verge article provides several examples of these inaccuracies (Founding Fathers, an American woman, and Nazis). See the examples of Nazis that Gemini AI offered below.

The errors seem to arise from competing algorithm commands. Google and other AI providers have come under criticism for providing biased images, specifically predominantly Caucasian faces, when asked for sample images. Google has therefore modified its algorithms so that they provide examples that are more diverse in gender and race. But this instruction seems to have either overridden or ignored the algorithm’s commitment or ability to provide historically accurate imagery. These examples suggest that the diversity instruction carries more weight than the historical accuracy instruction, which gives us a little insight into an otherwise black-box algorithm.

In any case, these examples provide students with wonderful inquiry and discussion opportunities.

What might students discuss regarding the historical in/accuracy of these results?

What other examples of algorithmic bias might they discover?

*

Sample inquiry questions with students:

- How offensive are the images below? That is, knowing the German Nazis’ historic treatment of non-Aryans, should Asians and Africans be upset and offended by the Nazi imagery?

- Or is this just another example of a young technology that will quickly evolve?

- What advice might students give Google about ways to ‘fix’ the inaccurate and potentially offensive images?

- Is this an important issue, or might it just be an attempt by The Verge to attract readers to its website and ads, i.e., clickbait?

- How might The Verge’s history of social justice-related articles help students answer that question?

- These questions might be developed into a debate: BIRT (Be It Resolved That) The Verge exploits controversial issues to increase its readership and profits.

These lesson ideas are most suitable for Intermediate and Secondary levels. (ed.)